I've been spending more and more time with Docker's new AI features lately. Here's a quick guide to each one and how to get started:

Note: these tools are changing fast, and I expect them to get better over time. This is written in May 2025, and if I come back to update it, I'll indicate changes.

Three AI features launched in early 2025

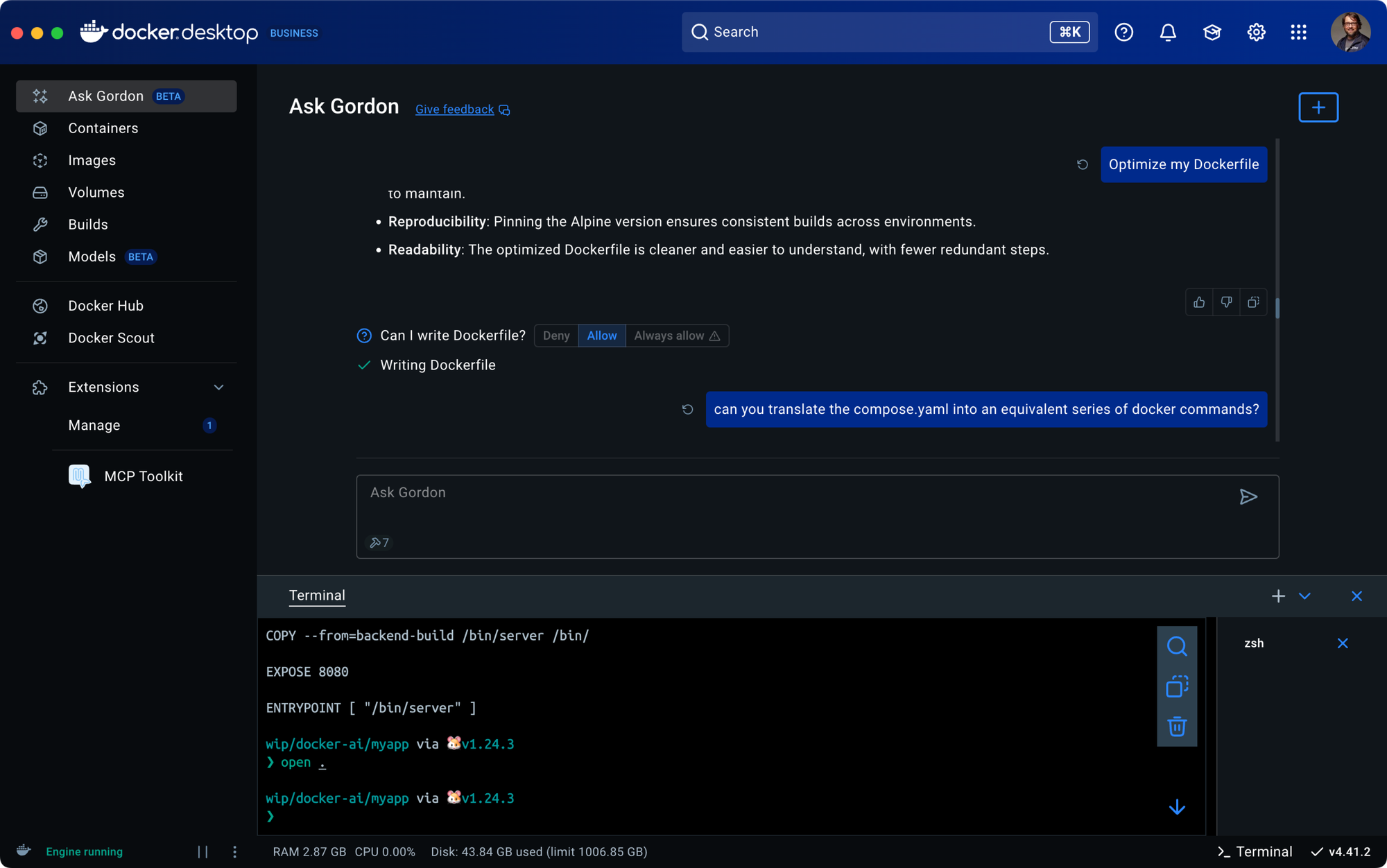

Ask Gordon: A basic AI chat built into Docker Desktop for help with Docker tools and commands.

Docker Model Runner: Runs a set of popular, free LLMs from Docker's AI model catalog for use with your local (and eventually server) apps.

Docker MCP Toolkit: A Docker Desktop extension + MCP Server tool catalog that lets you run MCP-enabled tools for giving LLMs new abilities.

AI Feature #1: Ask Gordon AI chatbot

TL;DR: Gordon is a free AI to help you with Docker tasks, but if you're paying for other AI chat or IDE tools, you'll likely skip Gordon AI for what's built into those.

At its core, this is a Docker-focused ChatGPT, built into the Docker Desktop GUI and CLI. It gives results on par with OpenAI's latest models, and also adds documentation links to its answers. Think of it as a more Docker-focused, up-to-date chatbot than what you'd likely get with the big foundation models. It continues to see improvement and has several advantages over general AI chatbots. The question is, will you use it in addition to other LLMs you're already using?

Pros:

- It can read/write files on disk and execute Docker commands, if you let it.

- It can run from the Docker GUI or the `docker ai` CLI.

- It now has MCP Toolkit access, so it can become aware of your local Docker and Kubernetes resources, and even control any MCP tools you add as Docker Desktop extensions. I plan to create a video on how this works. Stay tuned.

Cons:

- Doesn't store history of chats or allow you to have more than one chat thread.

- Slow. I believe it's using an OpenAI model on the backend, but it feels slower than the default ChatGPT models.

- Doesn't answer questions outside Docker or dev stuff.

In short, it's a great free AI to help you with Docker tasks, but if you're paying for ChatGPT/Claude/Windsurf/Cursor, you'll likely skip Gordon AI for what's built into your dev tools. The MCP feature to see container resources and add abilities on-the-fly to 3rd party tools is great, if you're not using MCP in your IDE already.

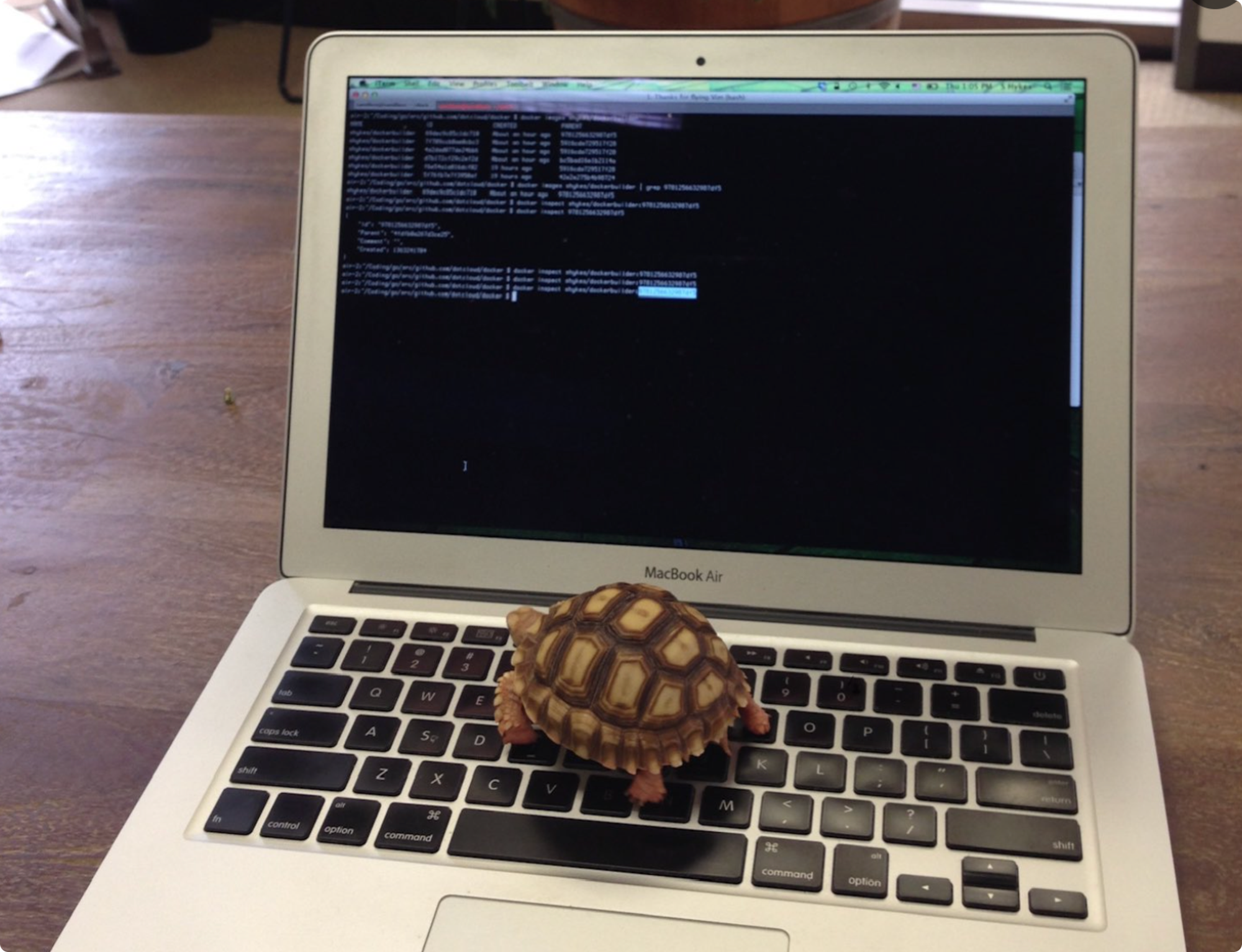

A note on the name Gordon...

Why is the AI called Gordon? Well, Gordon is the name of Docker's real-life turtle mascot (with her own Twitter account), who sadly died a few years ago (great story and pics from her human family). She'll live on as Docker's AI and mascot.

Image copyright Docker Inc.

AI Feature #2: Docker Model Runner (DMR)

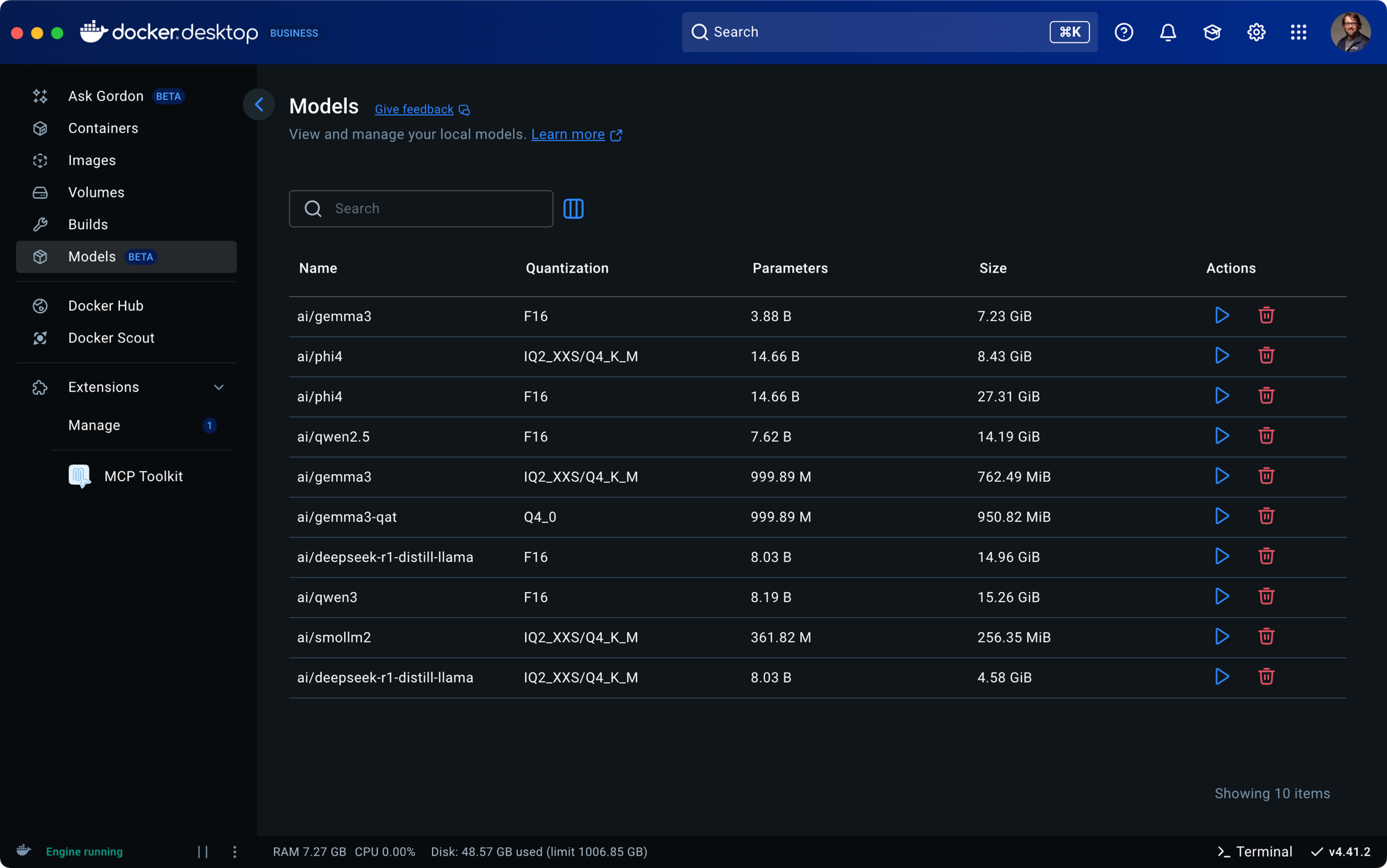

A new `docker model` command that lets you pull popular LLMs and run them locally, for access from your local containers or the host OS.

Below is the 3-minute version explaining how it works:

Below is a longer version explaining the architecture, sub-commands, and how to run a ChatGPT clone locally with my example at https://bret.show/dmr-quickstart
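Because Model Runner speaks an OpenAI-style chat completions API, any app that can send an HTTP POST can use it. Here's a minimal sketch of that request using only Python's standard library. It rests on a few assumptions you should check against Docker's current docs: that host-side TCP access is enabled in Docker Desktop on port 12434, that the endpoint path is `/engines/v1/chat/completions`, and that you've already pulled the `ai/smollm2` model with `docker model pull`.

```python
import json
import urllib.request

# Assumptions (not from the article): host-side TCP access to Model Runner is
# enabled on port 12434, and the ai/smollm2 model has already been pulled.
BASE_URL = "http://localhost:12434/engines/v1"
MODEL = "ai/smollm2"


def build_chat_request(prompt: str, model: str = MODEL,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion POST request for Model Runner."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, base_url: str = BASE_URL, timeout: float = 120.0) -> str:
    """Send the prompt and return the text of the first choice in the reply."""
    request = build_chat_request(prompt, base_url=base_url)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

With the model running, `print(ask("What does a multi-stage build do?"))` should print the model's answer. From inside a container you'd pass a different `base_url`. I believe Docker's docs use `http://model-runner.docker.internal/engines/v1` for that case, but verify it for your Docker Desktop version.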

🎧 Podcast audio-only version of DMR

Ep 180: Docker Model Runner

A podcast episode I recorded explaining DMR, when you would use it, and how it works.

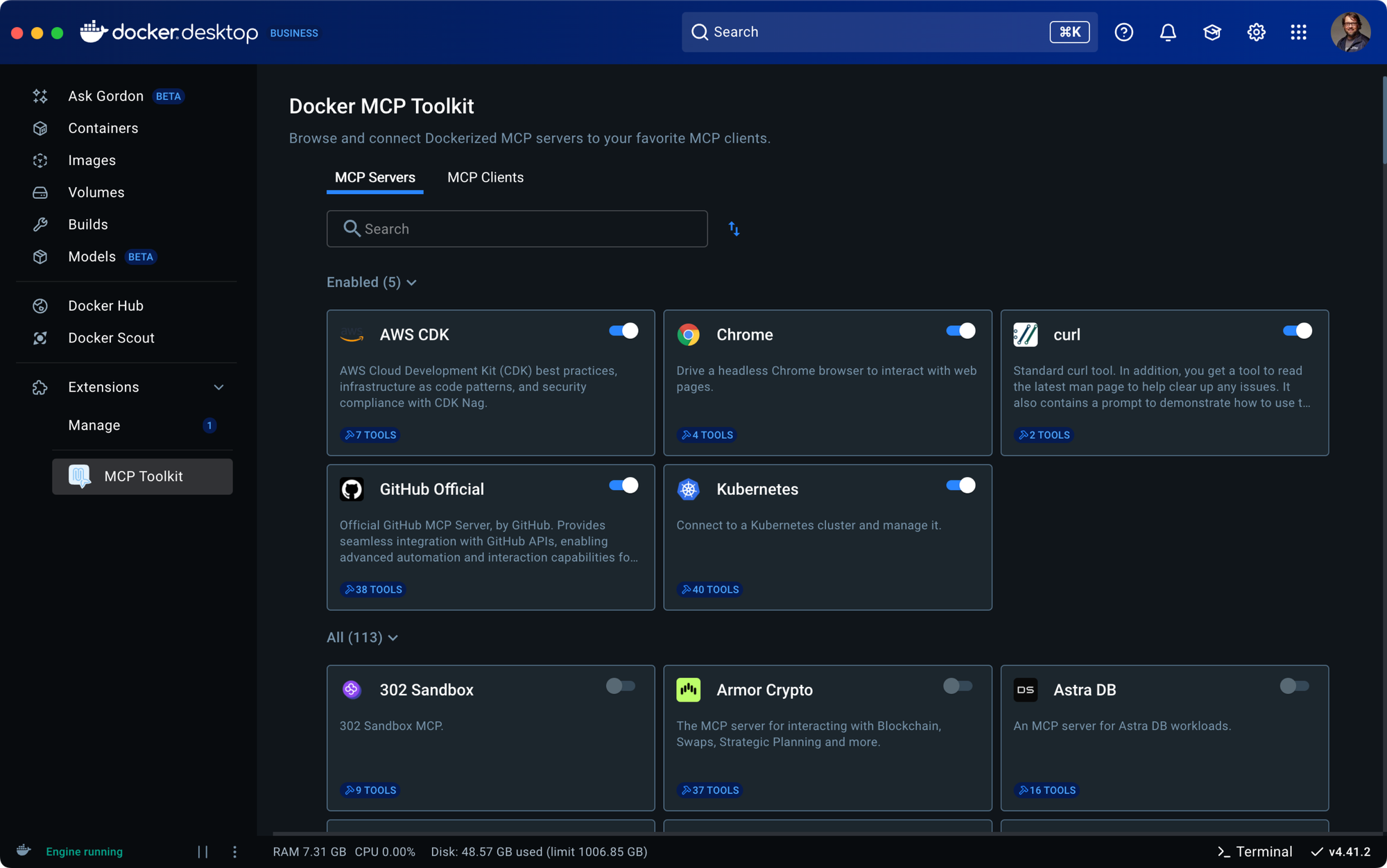

AI Feature #3: MCP Toolkit

This is the most recent release (I don't have videos yet) and could end up being the biggest deal. It's also the most confusing if you're not fully versed in how AI has evolved in the last 7 months. A few things you need to know before digging into this feature:

Agentic AI

In 2024, the term "Agentic AI" became the way we describe an LLM not just writing text, but actually performing work on our behalf. Running commands in the shell was the first "tool" many of us saw an LLM use, and suddenly, just months later, nearly every code editor and chatbot has access to hundreds of "tools."

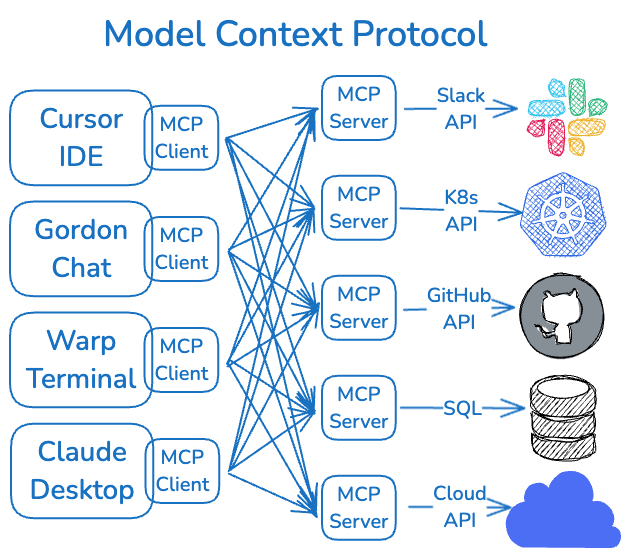

Model Context Protocol

Second, Anthropic released MCP (Model Context Protocol) as its idea for how models (LLMs) could access tools and data. It lets a model discover the functions a tool can perform and, most importantly, execute those functions (or retrieve the data in a human-readable way). I hesitate to call MCP a standard yet, but it took off so fast as the only universal way to get models and tools working together that it's the de facto standard just 6 months later. Seemingly every tool out there, including AWS's APIs, GitHub's APIs, Kubernetes, IDEs, and many CLIs, already has an MCP server. Think of MCP as a proxy between the LLM and the tool/API you want it to control: it lets the LLM interact with any external tool or API in a common way.
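Under the hood, MCP messages are JSON-RPC 2.0. A client asks a server for its tools with `tools/list`. Each tool comes back with a `name`, a `description`, and an `inputSchema` (JSON Schema) that tells the model which arguments to supply. The client then runs a tool with `tools/call`. Here's a minimal sketch of those messages. The `fetch_url` tool and its schema are hypothetical, made up for illustration, and the argument check is a simplification, not full schema validation.

```python
import json
from itertools import count
from typing import Optional

_next_id = count(1)  # JSON-RPC request ids should be unique within a session


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request, the message format MCP uses."""
    msg = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# How a server might describe one tool in its tools/list result.
# "fetch_url" and its schema are hypothetical, for illustration only.
FETCH_URL_TOOL = {
    "name": "fetch_url",
    "description": "Fetch a URL and report the HTTP status and response time.",
    "inputSchema": {
        "type": "object",
        "properties": {"url": {"type": "string"}},
        "required": ["url"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list:
    """List required inputs the caller left out (not full JSON Schema validation)."""
    return [k for k in tool["inputSchema"].get("required", []) if k not in arguments]


# The client first asks the server what it can do, then invokes a tool by name.
list_msg = jsonrpc_request("tools/list")
call_msg = jsonrpc_request(
    "tools/call", {"name": "fetch_url", "arguments": {"url": "https://docker.com"}}
)
print(json.dumps(call_msg))
```

The model never talks to the tool's API directly. It only sees the tool descriptions and picks a name plus arguments, and the MCP client sends the actual `tools/call` on its behalf.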

In practice, agentic AI plus the invention of MCP means we can, all of a sudden, ask a chatbot to "use curl 20 times to check the response of docker.com, then average that response, and then compare that to the storage averages from my SQL table, and then store the result difference in a Notion page." The LLM, assuming it has access to MCP servers for curl, a SQL database, and Notion's API, will be able to perform that work in a series of steps based on the single prompt we typed.
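That "series of steps" is essentially a loop. The model picks the next tool call, the client runs it, and the result goes back into the conversation until the model decides it's done. Below is a minimal sketch of that loop. `scripted_planner` is a hypothetical stand-in for the LLM, and the tools are fakes with made-up names (`time_request`, `query_stored_average`, `save_note`) returning canned data in place of real curl, SQL, and Notion MCP servers.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Any, Callable, Dict, List, Optional


@dataclass
class ToolCall:
    name: str
    arguments: Dict[str, Any]


@dataclass
class Step:
    call: ToolCall
    result: Any


def run_agent(plan_next: Callable[[List[Step]], Optional[ToolCall]],
              tools: Dict[str, Callable[..., Any]],
              max_steps: int = 50) -> List[Step]:
    """Ask the planner for the next tool call, run it, feed the result back; stop on None."""
    history: List[Step] = []
    for _ in range(max_steps):
        call = plan_next(history)
        if call is None:  # the "model" says the task is done
            break
        result = tools[call.name](**call.arguments)
        history.append(Step(call, result))
    return history


def scripted_planner(history: List[Step]) -> Optional[ToolCall]:
    """Hypothetical stand-in for the LLM; a real model picks calls from the prompt."""
    timings = [s.result for s in history if s.call.name == "time_request"]
    done = {s.call.name for s in history}
    if len(timings) < 20:
        return ToolCall("time_request", {"url": "https://docker.com"})
    if "query_stored_average" not in done:
        return ToolCall("query_stored_average", {})
    if "save_note" not in done:
        stored = next(s.result for s in history if s.call.name == "query_stored_average")
        return ToolCall("save_note", {"text": f"difference: {mean(timings) - stored:.1f} ms"})
    return None


# Fake tools standing in for the curl, SQL, and Notion MCP servers.
fake_timings = iter(range(100, 120))  # twenty fake response times, 100..119 ms
notes: List[str] = []


def save_note(text: str) -> str:
    notes.append(text)
    return "saved"


tools = {
    "time_request": lambda url: next(fake_timings),
    "query_stored_average": lambda: 100.0,
    "save_note": save_note,
}

steps = run_agent(scripted_planner, tools)
print(len(steps), notes)
```

In a real setup, the planner is the LLM reading the prompt plus every previous tool result. That is why a single sentence can drive 22 tool calls without anyone writing this script.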

Just a few months ago, we would have had to write a program to do that for us, or at best, spend hours in Zapier getting the right workflow to fire. That all of it can be replaced by a 10-second prompt written on the fly is hard to believe.

But MCP is here, and we're about to be hit by a flood of real-world use cases (and products to buy) that use LLMs + MCP to solve a near-unimaginable number of workflow scenarios.

And I'm SOOOO here for it. Expect me to share A LOT about this huge shift in capability for devs, CI, CD, operations, SRE, platform building, and more.

Gordon AI + MCP Toolkit

How to get started using an LLM with MCP tool calling

To get all these AI things working together, you need something with access to an LLM. Gordon is an "MCP Client" that lets you chat with a model in the Docker Desktop Dashboard, so we'll use it. Then we need to give it access to the MCP tools so it can do more than just answer questions:

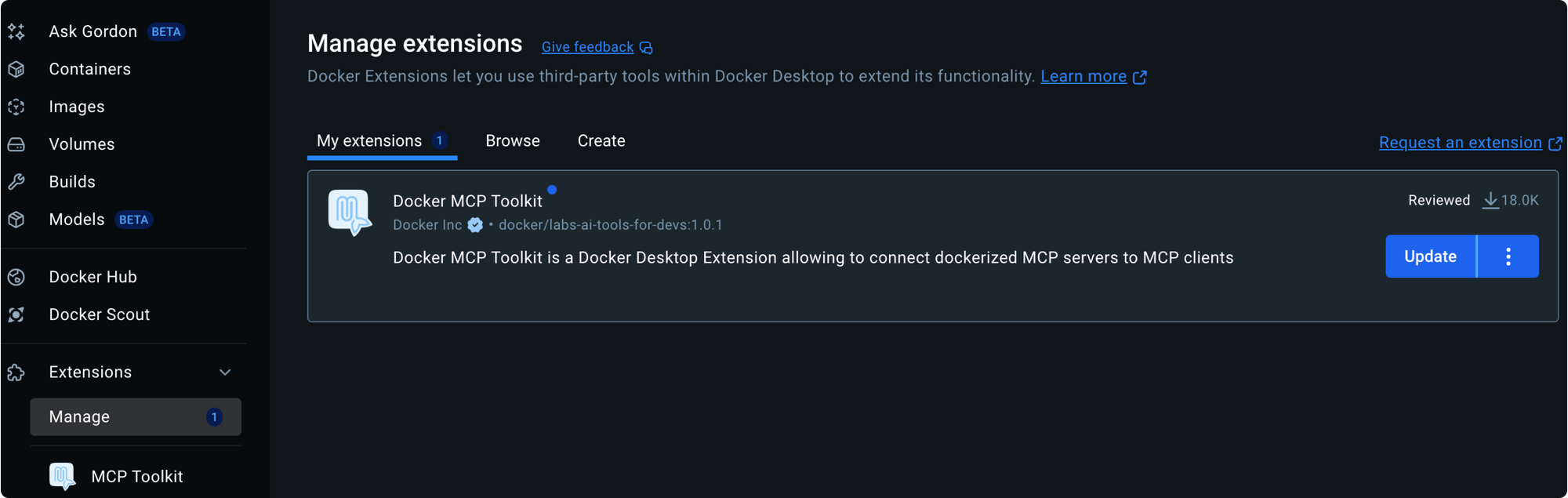

- Add the MCP Toolkit Extension to the Dashboard. This lets us run MCP-enabled tools as containers behind an MCP Server proxy that Docker Desktop runs for us.

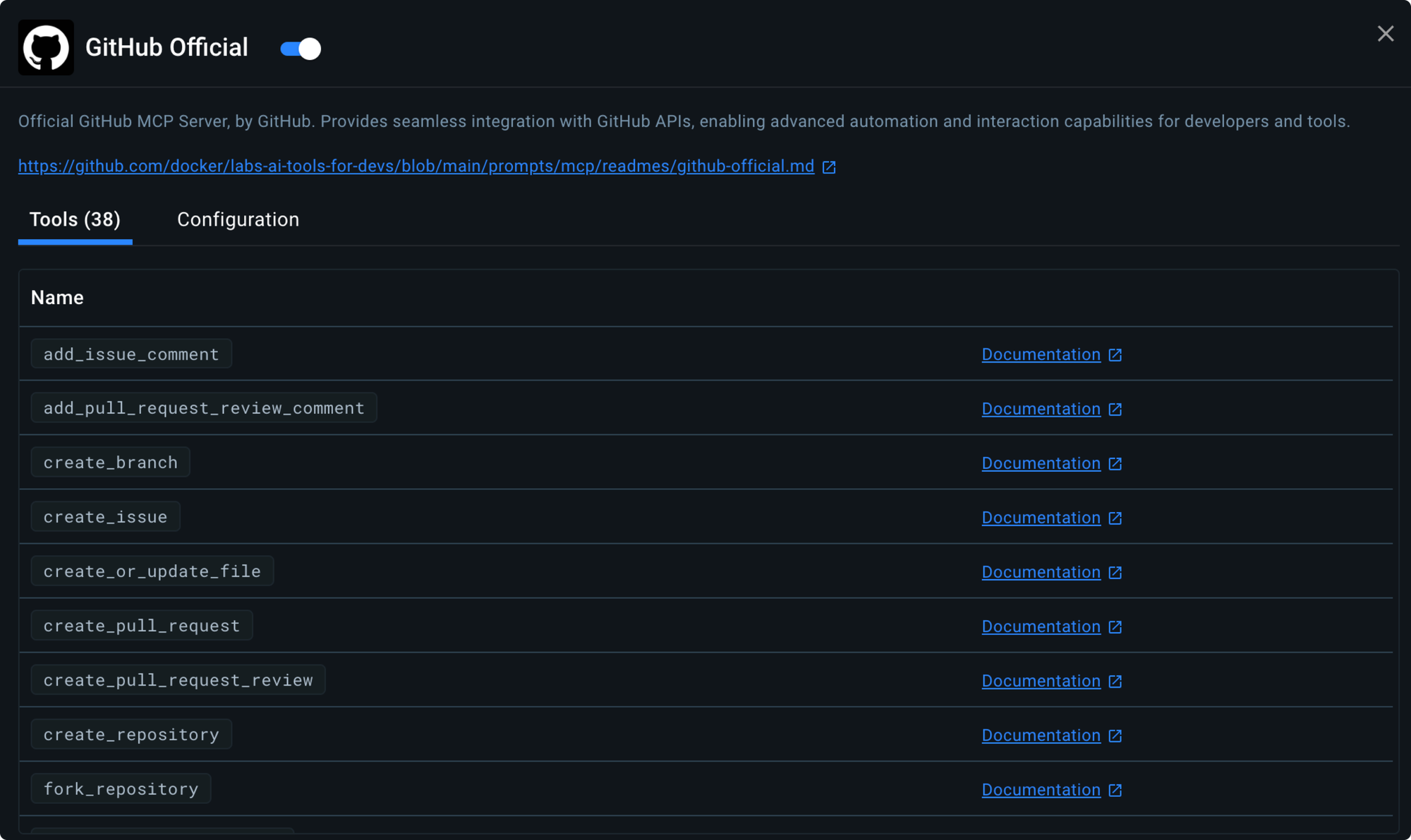

- Open the extension and enable some of your favorite tools. Notice how you can click the little blue box to see a list of tools (capabilities) that the MCP Server supports. These are the actions you can tell an LLM to take. I'll add the GitHub one after giving it a personal access token (PAT) to access the GitHub API.

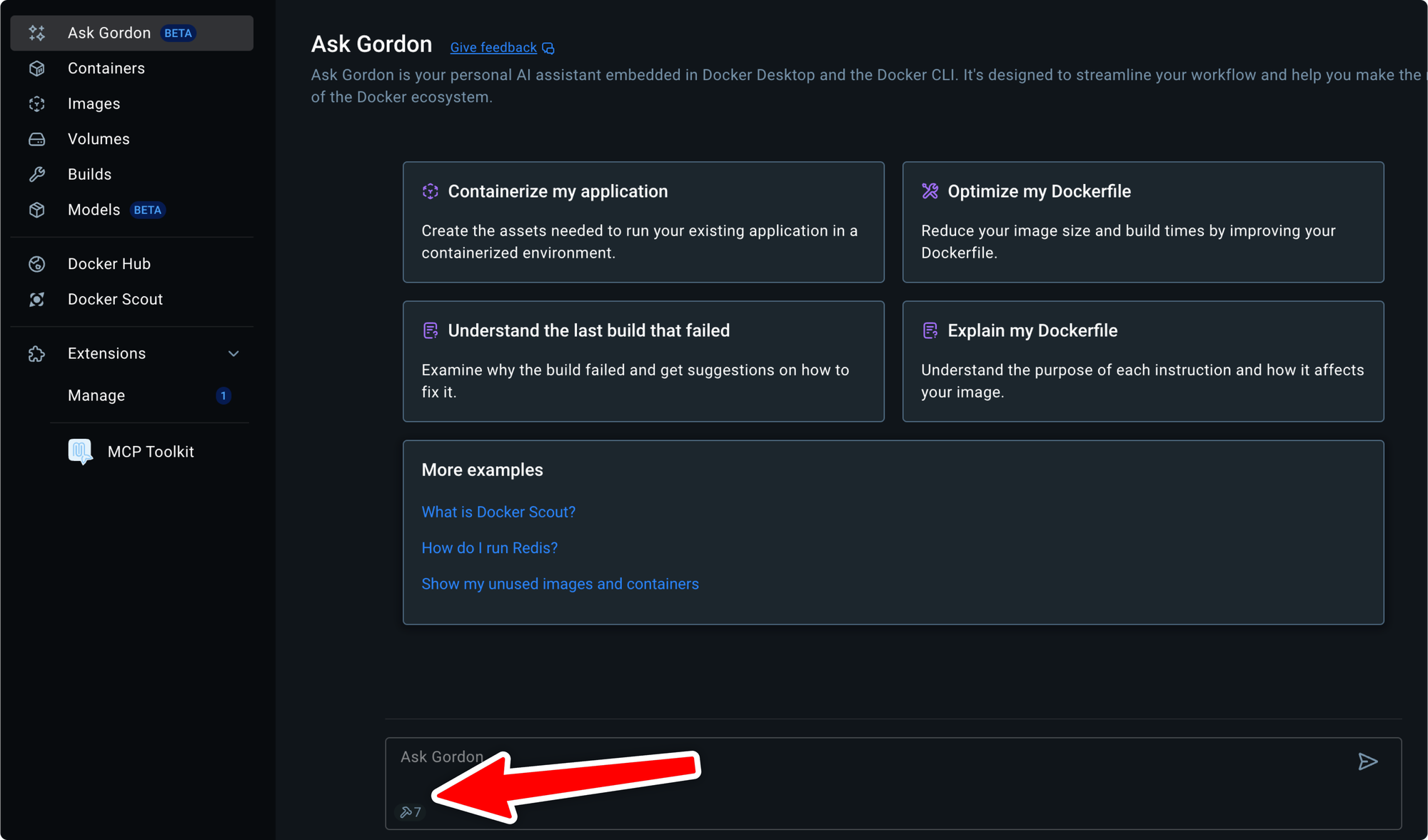

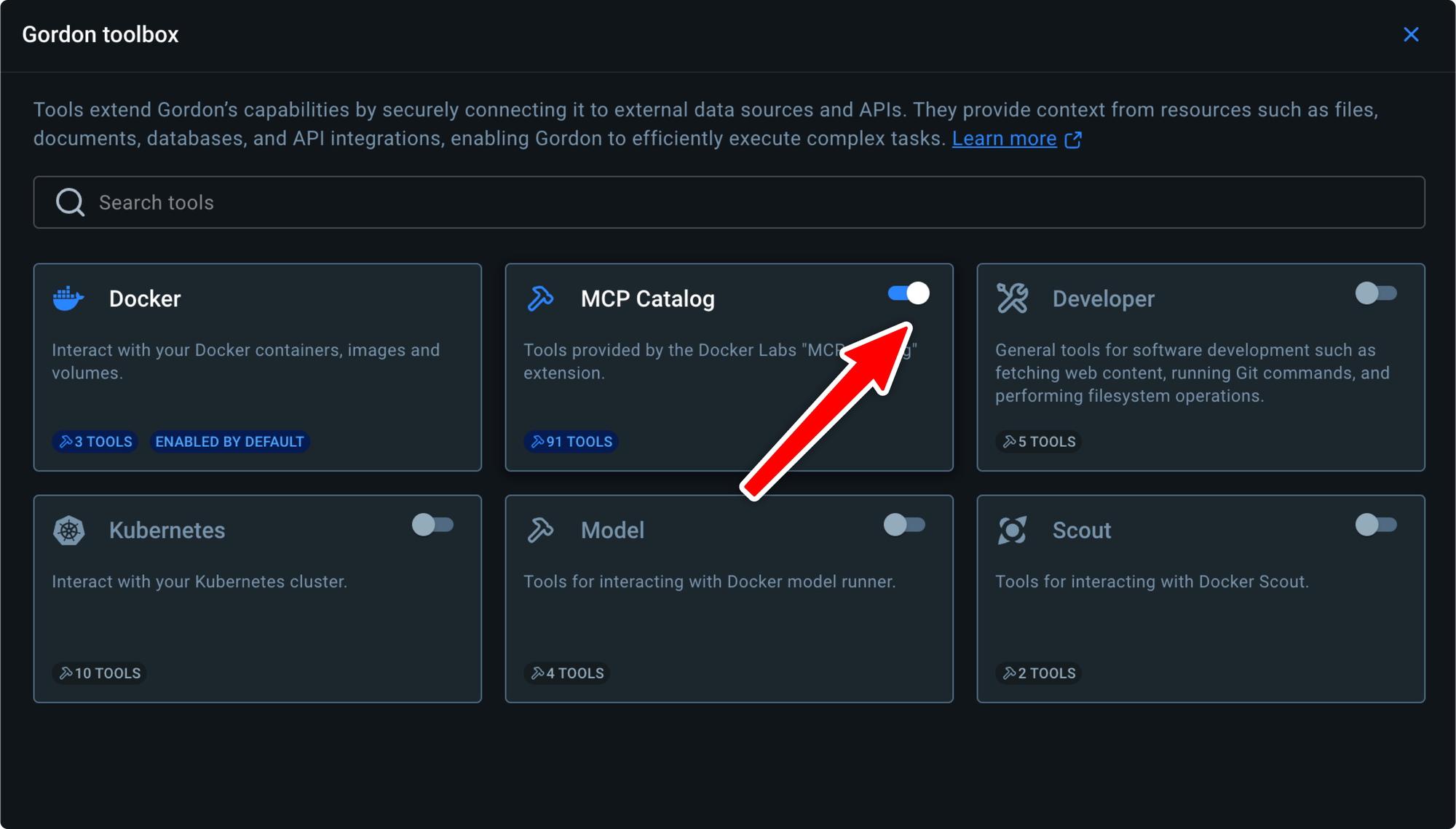

- Now we need to connect those MCP Servers to an "MCP Client", which is something with an LLM, like Cursor or Claude Desktop. The easiest option is to enable Gordon AI. Gordon is now an MCP Client and comes out of the box knowing how to control Docker, but it can also use other tools. Click the little hammer (tools) icon in the Gordon chat box and turn on "MCP Catalog", which gives it all the tools you've enabled in the MCP Toolkit extension.

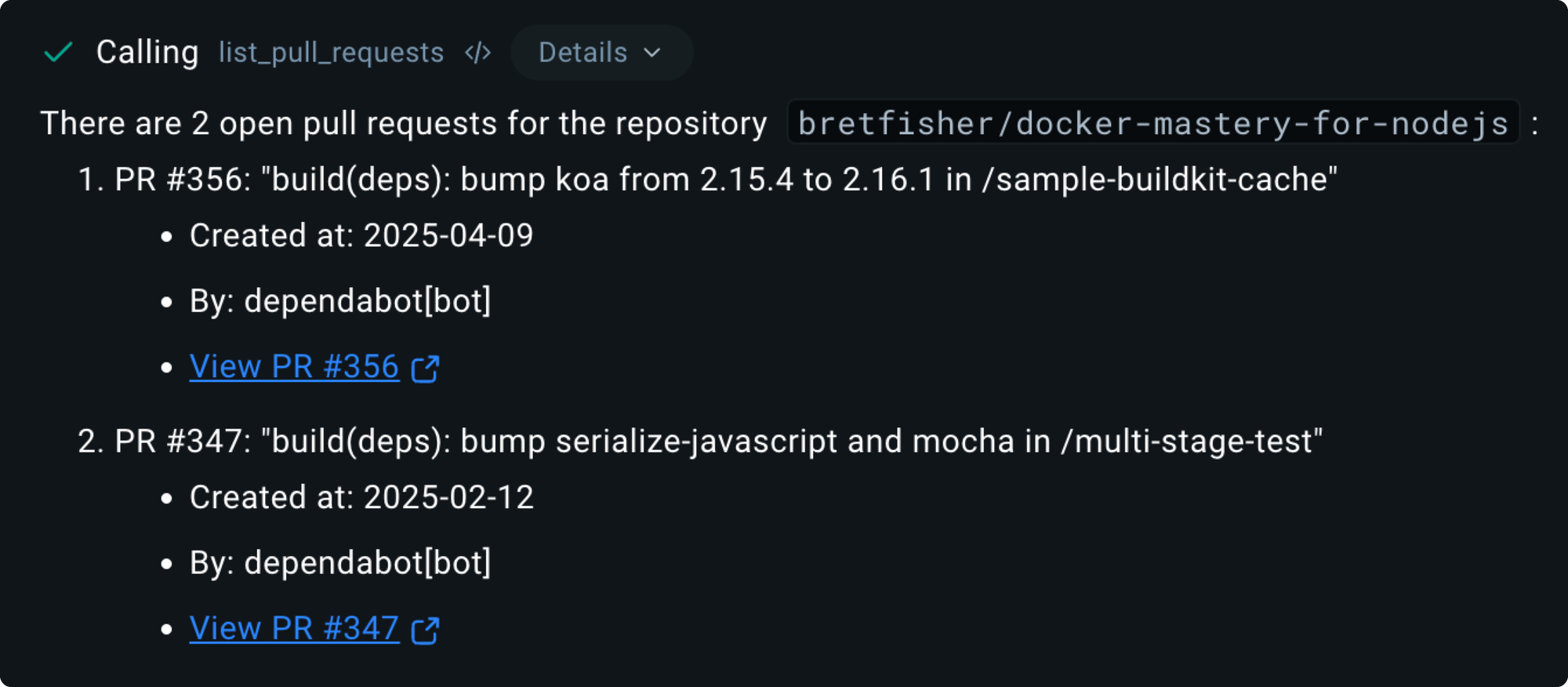

- Now you can ask Gordon AI to do something with GitHub.

List all the open PRs for my repo bretfisher/docker-mastery-for-nodejs

please merge PR #356 in repo bretfisher/docker-mastery-for-nodejs
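To make the "MCP Server proxy" idea from the first step a little more concrete, here's a minimal sketch of the general pattern. This is a hypothetical sketch, not Docker's actual implementation. One gateway collects the tool lists from several servers, presents them to the client (Gordon here) as a single catalog, and routes each call to whichever server owns that tool. `FakeServer`, `Gateway`, and all the tool names are made up for illustration.

```python
from typing import Any, Callable, Dict, Iterable, List


class FakeServer:
    """Hypothetical stand-in for one containerized MCP server and its tools."""

    def __init__(self, name: str, tools: Dict[str, Callable[..., Any]]):
        self.name = name
        self.tools = tools

    def list_tools(self) -> List[str]:
        return sorted(self.tools)

    def call_tool(self, tool: str, arguments: Dict[str, Any]) -> Any:
        return self.tools[tool](**arguments)


class Gateway:
    """Merge several servers' tool catalogs and route each call by tool name."""

    def __init__(self, servers: Iterable[FakeServer]):
        self.routes: Dict[str, FakeServer] = {}
        for server in servers:
            for tool in server.list_tools():
                if tool in self.routes:  # two servers exposing one name would be ambiguous
                    raise ValueError(f"duplicate tool name: {tool}")
                self.routes[tool] = server

    def list_tools(self) -> List[str]:
        return sorted(self.routes)

    def call_tool(self, tool: str, arguments: Dict[str, Any]) -> Any:
        if tool not in self.routes:
            raise KeyError(f"unknown tool: {tool}")
        return self.routes[tool].call_tool(tool, arguments)


# Hypothetical tool names; real MCP servers define their own.
github = FakeServer("github", {
    "list_pull_requests": lambda owner, repo: f"open PRs for {owner}/{repo}",
    "merge_pull_request": lambda owner, repo, pull_number: f"merged {owner}/{repo}#{pull_number}",
})
docker = FakeServer("docker", {"list_containers": lambda: "no containers running"})
gateway = Gateway([github, docker])

print(gateway.list_tools())
print(gateway.call_tool("merge_pull_request", {
    "owner": "bretfisher", "repo": "docker-mastery-for-nodejs", "pull_number": 356,
}))
```

The client only has to connect to one endpoint. Enabling another tool in the extension just adds more entries to the merged catalog, which is why Gordon picks up new abilities without any extra setup on your side.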

This reminds me of the "ChatOps" hype a decade ago, only now we have way more flexibility and reach. I can't wait to spend more time reducing toil with MCP tools.

That's it for this week!