As a Docker Captain and a Udemy instructor to 300,000 Docker and Kubernetes students, I hear the “Kubernetes vs. Docker” question a lot. Like me, the tech world is enamored with container tooling, and Docker and Kubernetes are its two top brands.

“Versus” isn’t the right question, though, because these tools aren’t an either-or choice.

Consider these definitions:

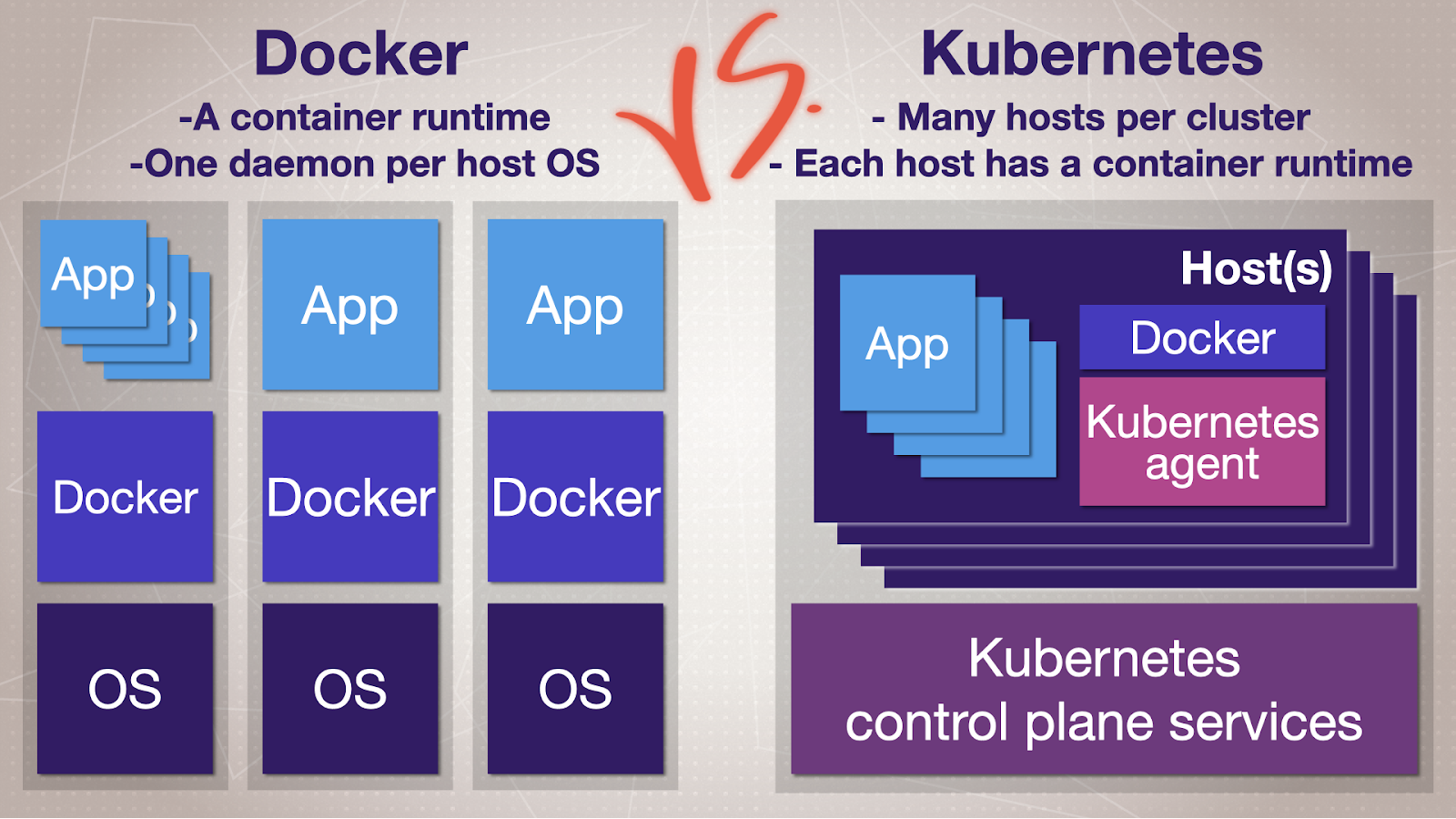

- Docker Engine is a “container runtime.” It knows how to run and manage containers on a single operating system host. You must control each host separately.

- Kubernetes (K8s) is an open-source “container orchestration platform” (AKA orchestrator). It knows how to manage containers across many operating system hosts, each of which, in turn, runs its own container runtime.

There’s a lot to unpack in those statements. In this post, I’ll address questions like the following:

- What are these tools, and why are they important?

- Is “Docker Engine” different from Docker?

- Does everyone need both Docker and Kubernetes?

- What are their alternatives?

- What about serverless, and how are containers related?

Let’s dig in!

Docker origins

In 2013, Solomon Hykes, CEO of Dotcloud, announced the Docker open source project. When it hit 1.0 in 2014, I gave it a try. It was “love at first deploy.”

The more I understood about its features, the more I wanted to use Docker everywhere.

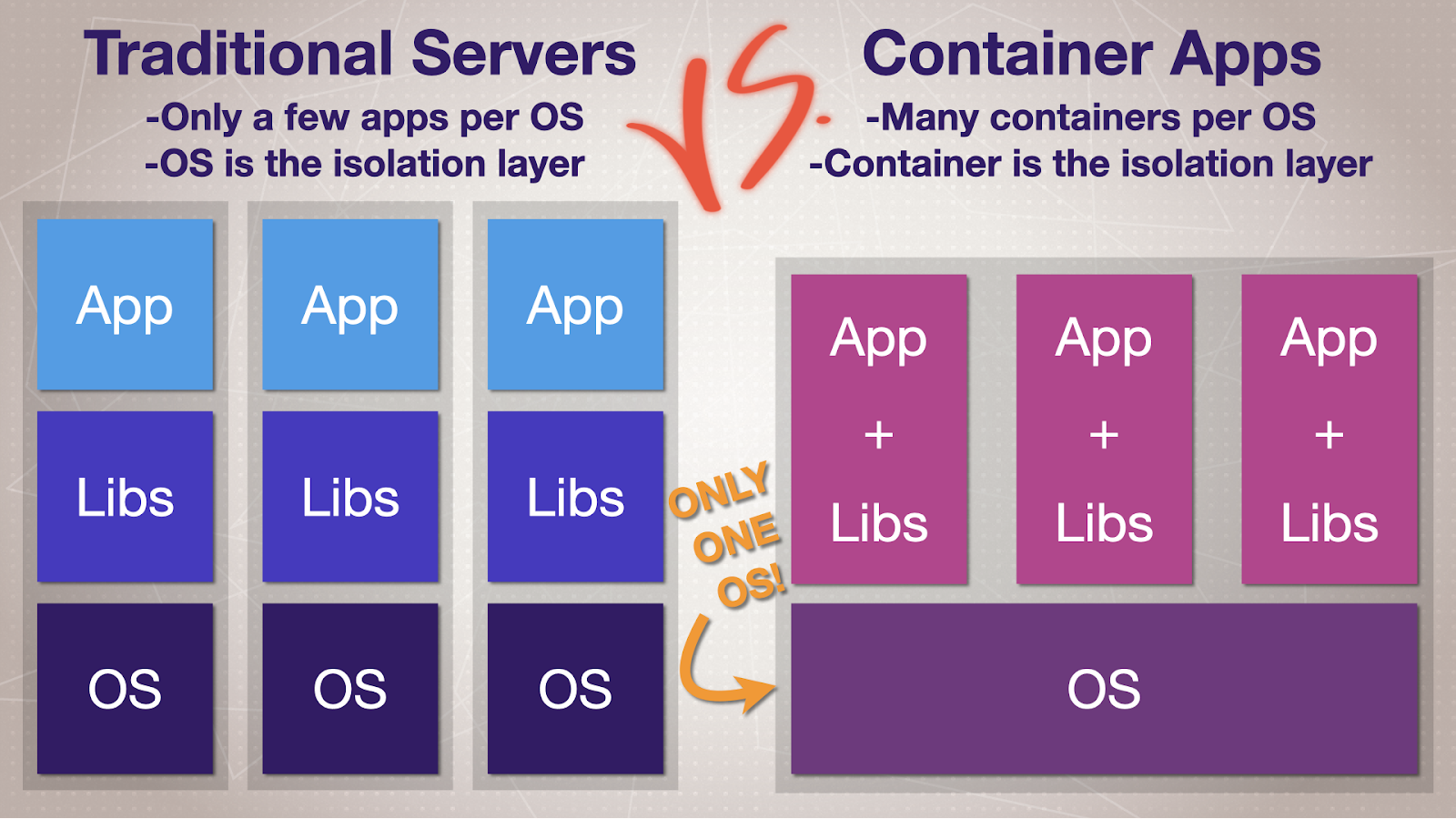

The concept of containerized applications – using containers to isolate many apps from each other on a single server – has existed for decades. Yet, there wasn’t an easy-to-use tool that focused on the full application lifecycle until Docker.

The team that created Docker took complex tools and wrapped them in a command-line interface (CLI) that mere mortals could understand.

What’s Docker Engine got to do with Docker?

Soon after Docker’s release, the founders scrapped Dotcloud and created Docker Inc. They now make a line of products with Docker in the name.

In this post, we’ll focus on the original project, now called Docker Engine. This is what people are usually talking about when they say “Docker,” and what I’ll refer to by default in this article.

Note that Docker also makes Docker Desktop, a bundle of products for Windows, Mac, and Linux desktops. Docker Desktop is the easiest way to run Docker and Kubernetes on your local machine for developing and testing server apps. Millions of people use it every month, and I recommend it.

Docker’s three key innovations

Docker introduced three innovations for containerized applications, combining capabilities we previously couldn’t get easily from a single command-line tool and server daemon:

1. Easy app packaging

Docker takes your application and all of its software dependencies – minus the OS kernel and hardware drivers – and bundles them in a set of tarballs (similar to zip files). Using cryptographic hashing, Docker ensures these tarballs are identical on every machine they run on.

The app tarball(s) and metadata are known together as a container image. This is now considered the modern way to package server apps. The container image standard is a major improvement over previous packaging systems (like apt, yum, msi, npm, maven, etc.), which don’t bundle an application’s dependencies.
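
To make the hashing idea concrete, here is a minimal Python sketch of content addressing: pack some files into a tarball with fixed metadata, then name that tarball by its SHA-256 digest. The `build_layer`, `digest`, and `verify` helpers are hypothetical illustrations, not Docker’s code; real images follow the OCI image format (compressed layers, JSON manifests, and more), which this sketch ignores.

```python
import hashlib
import io
import tarfile


def build_layer(files: dict[str, bytes]) -> bytes:
    """Pack files into an uncompressed tarball (a hypothetical stand-in for a layer)."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name in sorted(files):  # fixed order, so the same input gives the same bytes
            data = files[name]
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            info.mtime = 0  # fixed timestamp, so rebuilding doesn't change the hash
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def digest(blob: bytes) -> str:
    """Name a blob by its content, in the familiar "sha256:<hex>" form."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def verify(blob: bytes, expected: str) -> bool:
    """A machine that receives the blob recomputes the hash and compares."""
    return digest(blob) == expected


layer = build_layer({"app/main.py": b"print('hello')\n"})
layer_id = digest(layer)
print(layer_id)
print(verify(layer, layer_id))                # True
print(verify(layer + b"tampered", layer_id))  # False
```

Because the digest depends only on the bytes, two machines holding the same layer can confirm they have identical content without trusting each other. Pinning the file order and timestamp is what keeps the tarball reproducible in this toy version.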

2. Easy app running

Docker runs each container in an isolated file system. Each container gets its own networking and resource limits and can’t see anything else on the host operating system. Docker sets all of this up with a single, short command.

This opinionated approach to containers keeps the CLI friendly and easy to use for beginners. It’s the secret sauce that has made Docker internet-famous with developers.

3. Easy app distribution

Docker created the idea of a “container image registry,” which allows you to store images on a central HTTP/S server and push/pull them as easily as you push and pull git commits. The most popular registry is Docker Hub, where you can find official images for many open source projects.

The idea of a built-in push/pull for your app images was an instant hit, and now container image registries are everywhere. It’s like the server version of an Apple/Google App Store.
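
The push/pull model can be sketched as a toy in-memory registry: content is stored under its digest, and a tag such as `myapp:1.0` is just a pointer to a digest. `ToyRegistry` and its methods are hypothetical; real registries such as Docker Hub expose this over HTTPS via the OCI Distribution API, and a real image is a manifest plus several layer blobs rather than a single blob.

```python
import hashlib


class ToyRegistry:
    """Hypothetical in-memory registry: content-addressed blobs plus movable tags."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}  # digest -> content
        self.tags: dict[str, str] = {}     # "name:tag" -> digest

    @staticmethod
    def _digest(blob: bytes) -> str:
        return "sha256:" + hashlib.sha256(blob).hexdigest()

    def push(self, ref: str, blob: bytes) -> str:
        d = self._digest(blob)
        self.blobs[d] = blob  # pushing identical content twice stores it once
        self.tags[ref] = d    # re-pushing a tag simply moves the pointer
        return d

    def pull(self, ref: str) -> bytes:
        d = self.tags[ref]
        blob = self.blobs[d]
        # The client re-hashes what it downloaded before trusting it.
        if self._digest(blob) != d:
            raise ValueError(f"digest mismatch for {ref}")
        return blob


registry = ToyRegistry()
v1 = registry.push("myapp:1.0", b"layer bytes for v1")
registry.push("myapp:latest", b"layer bytes for v1")
print(registry.tags["myapp:latest"] == v1)  # True: same content, same digest
print(len(registry.blobs))                   # 1
```

Separating immutable content (digests) from mutable names (tags) is what lets many tags share storage while every pull can still be verified.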

Docker’s three original innovations helped make it the #1 most wanted platform for multiple years in the Stack Overflow developer survey. Today, Docker Engine gives us a great, easy-to-use command line for humans to do many things related to containerized applications, including:

- Create container images with a built-in builder (similar to make or bash scripts)

- Push and pull images from registries

- Create and manage containers on a single machine

- Create virtual networks for containers to communicate on the same host

- Export/import images for offline transport

- Run custom commands in existing containers

- Centralize and access app logs

- And so much more!

If Docker is so great, why do we need Kubernetes?

You’ll find that once you start using containers, you’ll want to use them everywhere – and once you have more than a few servers, you’ll start wishing for an easier way to run multiple related containers for your apps.

With Docker, you still need to manually set up everything between servers: networking, security policies, DNS, storage, load balancers, backups, monitoring, and more. With dozens of related containers, this creates as much work as we had before containerized applications, if not more.

This multi-server problem is what the Kubernetes founders wanted to solve. They knew that in order to make production container systems as easy as Docker is for a single machine, they needed to address the underlying hardware and network resources. Kubernetes is an open-source multi-server clustering system. It orchestrates all the tools required for a real-world solution hosting dozens, hundreds, or even thousands of apps. Think of it as an abstraction that hides the many moving parts required to run multiple containerized applications.

Kubernetes is to a group of servers what Docker is to a single server. It’s a set of programs and APIs that control many container runtimes (such as Docker Engine) from one CLI.

Kubernetes orchestrates your containers using the paradigm of “desired state.” You give it your desired outcome (such as “Run 5 Apache web servers”), and it figures out the best way to do that across your Kubernetes cluster. It then ensures those containers are always running: if one fails, Kubernetes recreates it so that the actual state matches your desired state.
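
The desired-state idea boils down to a reconciliation loop: compare what should be running with what is running, then act on the difference. Below is one pass of such a loop as a minimal Python sketch. The `Container` type and `reconcile` function are hypothetical; real Kubernetes controllers run continuously against the API server rather than over a plain list, and `httpd` is just a label standing in for the Apache web servers in the example above.

```python
from dataclasses import dataclass
from itertools import count

_ids = count(1)  # source of unique container IDs for this toy model


@dataclass
class Container:
    id: int
    image: str
    healthy: bool = True


def reconcile(desired: dict[str, int], actual: list[Container]) -> list[Container]:
    """One pass of a hypothetical control loop: return a state matching `desired`."""
    alive = [c for c in actual if c.healthy]  # failed containers are discarded
    result: list[Container] = []
    for image, replicas in desired.items():
        running = [c for c in alive if c.image == image]
        result.extend(running[:replicas])         # scale down if there are too many
        for _ in range(replicas - len(running)):  # scale up or replace failures
            result.append(Container(next(_ids), image))
    return result  # containers for images no longer desired are dropped


desired = {"httpd": 5}             # "Run 5 Apache web servers"
state = reconcile(desired, [])     # starts 5 containers
state[0].healthy = False           # simulate a crash
state = reconcile(desired, state)  # replaces the failed one
print(len(state), all(c.healthy for c in state))  # 5 True
```

Running the same pass on a state that already matches changes nothing, which is what makes it safe for a controller to repeat it forever.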

Kubernetes has a “control plane” of default services, which themselves can run as containers. The control plane manages one or more machines (running Linux or Windows) through an agent, the kubelet, on each machine.

Kubernetes’ main job is to tell the container runtime (such as Docker) what to do, but it has taken on a growing number of related duties. Other built-in features include container health checks, auto-replacing failed apps, automating web proxy configurations, managing network security policies, and even auto-provisioning external storage.

Do you want even more?

One of the most significant features in Kubernetes is its extensibility in the form of Custom Resource Definitions (CRDs). CRDs let you add new object types and functionality that behave as first-class Kubernetes features. CRDs turned Kubernetes from a container manager into a jack-of-all-trades automation engine for everything related to managing the full app lifecycle.

Some CRD plugins are advanced enough that the community has come up with a new term to describe them: Kubernetes Operators.

Need to provision, manage, and upgrade a multi-node PostgreSQL cluster? Operators can do that. Need a backup solution for your apps and a disaster-recovery tool for your cluster? Kubernetes Operators can do that, too.
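
An Operator is essentially the same reconciliation idea applied to a custom resource type. As a minimal sketch, assume a hypothetical `PostgresCluster` resource whose spec declares a replica count and a version; the operator compares that spec with the observed status and plans the steps to close the gap. The field names, the action strings, and the choice to upgrade before scaling are all illustrative assumptions, not any real operator’s behavior.

```python
from dataclasses import dataclass


@dataclass
class PostgresCluster:
    """Hypothetical custom resource: desired spec plus observed status."""
    spec_replicas: int
    spec_version: str
    status_replicas: int
    status_version: str


def plan(pg: PostgresCluster) -> list[str]:
    """Return the ordered steps an operator might take to reach the spec."""
    steps: list[str] = []
    if pg.status_version != pg.spec_version:
        steps.append(f"upgrade {pg.status_version} -> {pg.spec_version}")
    # Add missing replicas, or remove extras starting from the highest index.
    for i in range(pg.status_replicas, pg.spec_replicas):
        steps.append(f"add replica {i}")
    for i in range(pg.status_replicas - 1, pg.spec_replicas - 1, -1):
        steps.append(f"remove replica {i}")
    return steps


print(plan(PostgresCluster(3, "16", 1, "15")))
# ['upgrade 15 -> 16', 'add replica 1', 'add replica 2']
```

A real operator would also carry out each step, watch for it to finish, and record the new status on the resource, then run the comparison again.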

Using Docker with Kubernetes

Now that you know where these tools come from and what problems they solve, the question is: Should you use them together?

Yes. For now.

If you only need to control containers on a single machine and you’re new to containers, Docker is the best choice. Keep it simple to start, and you can grow into an orchestrator later. If you need to run your apps’ containers across multiple servers, it may be time to consider Kubernetes.

Should you install Kubernetes on top of Docker?

Referring back to my initial definitions, remember that Kubernetes requires a container runtime to manage containers on its behalf. Before you install Kubernetes, you must decide which runtime to use.

For nearly every sysadmin building clusters, Docker has been the default since the beginning of Kubernetes. There have been various other runtime options for Kubernetes, but Docker tends to have the broadest feature support as a container runtime, especially on Windows Server.

The truth is, though, you often won’t need to worry about choosing a container runtime. Kubernetes is unique in that it’s an ecosystem of choices rather than a single download.

Here are two significant distinctions that set Kubernetes apart from typical open source projects:

- Kubernetes is a set of choices that come together to form the control plane. Some parts are standard, like the Kubernetes API, Scheduler, and Kubelet agent. Other pieces have no default and require choosing from many industry options, like container runtime, networking, and ingress proxy.

- Kubernetes is usually adopted through a distribution, and there are over 100 distributions of the Kubernetes control plane. My recommendation is to pick one that aligns with your existing vendors and requirements. Each distribution bundles a set of these choices together and decides what’s best for its customers. Distributions often provide a more pleasant out-of-box experience, with additional tooling to help with deployment, upgrades, and more. They are often much more than the vanilla upstream Kubernetes you’ll find on GitHub, and I recommend you try them first.

Most professionals choose a Linux distribution (Ubuntu, CentOS, RHEL, etc.) to run the Linux kernel. Likewise, many people choose a Kubernetes distribution to run Kubernetes. The Kubernetes control plane and its plugins are like the components of a Linux distribution: only a few of us can manually put all the pieces together into a complete, stable, and secure solution without a distribution. Most companies don’t need that level of complexity anyway.

Every major public cloud offers its own Kubernetes distribution as a service, like AWS EKS, Azure AKS, DigitalOcean Kubernetes, etc. There are also various vendor-specific (VMware, Red Hat, NetApp) and vendor-neutral (Rancher, Docker Enterprise, k3s) options. Many of these Kubernetes distributions, especially the cloud distributions, choose the container runtime for you.

Using Kubernetes without Docker

Once you choose to run Kubernetes, you’ll rarely touch the CLI for your container runtime. The runtime becomes a utility API managed by Kubernetes. Docker has a broad set of features that Kubernetes will never use, and many teams are looking for a smaller, purpose-fit container runtime without all of Docker’s “user-focused” features.

Kubernetes wants a minimal container management API to do the grunt work. Kubernetes even created an API standard called the Container Runtime Interface (CRI) to encourage more runtimes besides Docker. As alternatives have matured, Docker’s share as the runtime in Kubernetes clusters has dropped. Even so, the 2019 Sysdig usage report showed that Docker Engine still ran 79% of containers.

Then there’s containerd, the component of Docker that does the grunt work of talking to the Linux kernel. It is now the second most popular Kubernetes runtime and is quite popular as a minimal alternative to Docker Engine. Cloud service providers often use it as the default because of its small footprint and its purely open-source design and oversight. It doesn’t aim to be user-friendly for humans directly, but rather to act as middleware between your containers and tools like Kubernetes.

There’s also CRI-O, a runtime created by Red Hat and designed exclusively for Kubernetes. It’s the default runtime in Red Hat OpenShift, Red Hat’s distribution of Kubernetes.
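
The point of a standard like CRI is that the orchestrator codes against one interface and any conforming runtime can sit behind it. The real CRI is a gRPC API with calls such as `PullImage` and `StartContainer`; the Python sketch below shrinks that idea to a two-method `typing.Protocol`. The `ContainerRuntime` protocol, the `ToyRuntime` class, and `run_workload` are hypothetical, and the runtime names passed in are only labels.

```python
from itertools import count
from typing import Protocol


class ContainerRuntime(Protocol):
    """Hypothetical, heavily simplified stand-in for a runtime interface like CRI."""

    def pull_image(self, image: str) -> None: ...
    def start_container(self, image: str) -> str: ...


class ToyRuntime:
    """A toy runtime. It never names ContainerRuntime; matching methods are enough."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.images: set[str] = set()
        self.containers: dict[str, str] = {}  # container ID -> image
        self._ids = count(1)

    def pull_image(self, image: str) -> None:
        self.images.add(image)

    def start_container(self, image: str) -> str:
        if image not in self.images:
            raise RuntimeError(f"{self.name}: image {image!r} has not been pulled")
        cid = f"{self.name}-{next(self._ids)}"
        self.containers[cid] = image
        return cid


def run_workload(runtime: ContainerRuntime, image: str) -> str:
    """Orchestrator-side code: it only uses the interface, never a specific runtime."""
    runtime.pull_image(image)
    return runtime.start_container(image)


for rt in (ToyRuntime("containerd"), ToyRuntime("cri-o")):
    print(run_workload(rt, "nginx"))
# containerd-1
# cri-o-1
```

With this split, swapping Docker Engine for containerd or CRI-O becomes a deployment choice rather than a change to the orchestrator’s code.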

By the way: don’t confuse this shift in runtimes on server clusters with how developers and sysadmins run containers on their desktops. Because the container image is a standard, you can run the same images with different tools based on your needs without worrying about compatibility.

Thanks to its human-friendly approach, Docker is still the standard way that everyone I meet runs containers locally (mainly through Docker Desktop and Docker Compose).

Do you even need Kubernetes?

You’ll hear people (especially me) talk about how complex Kubernetes can be. The two biggest drawbacks of Kubernetes are the complexity of learning and managing Kubernetes clusters and the extra hardware resources it requires. These drawbacks matter so much that they sometimes drive my students and clients to look at alternative approaches.

The fact is that operations engineers wrote Kubernetes for operations engineers. It can be difficult to jump from the friendly, developer-focused Docker tooling to the verbose and unopinionated world of Kubernetes. Projects like k3s and Rio are trying to solve the hard parts, but it’s far from easy if you’re managing a custom cluster yourself. Kubernetes can support large and complex requirements, which also means it’s likely unnecessary if all you need is a few dozen containers and three to five servers.

I often work with engineering teams responsible for creating their infrastructure. We usually spend much of our time figuring out whether we need Kubernetes at all. Kubernetes is undoubtedly the most popular multi-server container orchestrator in most circles, but there are notable alternatives such as:

- AWS ECS – Amazon created this proprietary solution before Kubernetes was popular, and it has stuck around as the more straightforward alternative to AWS EKS.

- Docker Swarm – Now maintained by Mirantis, this built-in feature of Docker Engine works great for many of my Docker students. It’s known for its easy setup and app deployment. New features arrive slowly, yet plenty of businesses rely on it daily.

- HashiCorp Nomad – It has a loyal fanbase and a steady stream of updates.

Personally, I’m a fan of both Docker Swarm and anything HashiCorp makes.

What about serverless?

If your team is already going this route, that’s great. Under the hood, most serverless infrastructure runs your functions in containers.

Serverless treats containers and orchestrators as lower-level abstractions that someone else will manage.

You get to run your functions on top of those containers and orchestrators, which handle the overhead and save your team the time it would otherwise spend managing servers. Abstractions like this remove the infrastructure plumbing and are likely the future for many of us.

Remember, tools aren’t the end goal.

Regardless of whether you choose Docker, Kubernetes, or serverless, remember that the tool isn’t the ultimate objective. Always keep your business’s DevOps goals in mind, and try not to get distracted by the “shiny” appeal of advanced tools just for the sake of using cool new tech.

Container implementation project metrics usually focus on increasing speed while also increasing reliability.

The goals I tend to focus on when implementing these tools include:

- Increasing agility – time from business idea to customer delivery

- Reducing the time-to-deploy – time from commit to server deployment

- Increasing resiliency – how often you have failures, but more importantly, how quickly they are fixed

Containers and their tools can improve all of those factors. Still, they will come with their own “new problems” and additional workload. Be aware of the trade-offs when choosing your tools.

I wish you good luck in your container journey, and may all your deployments be successful!

I originally posted this article on Udemy’s Blog as “Kubernetes vs. Docker: The Full Guide from a DevOps and Container Consultant.”